The X3F images that the SIGMA SD10 camera produces are in some form of compressed RAW format. They can be dumped out as decoded TIFF files using the x3f_extract software from the toolkit available at

http://www.proxel.se/x3f.html

I have taken a set of images of a test card with the camera's internal IR filter removed and with a Wratten 25A red filter (plus a standard UV-blocking filter) on the lens. Starting from a normal exposure setting, I used longer and longer exposure times until the images were evidently saturated in all colours, judging from the little histogram on the back of the camera.

After conversion to 16-bit TIFF and reformatting to FITS, the images were inspected and the histograms plotted. As exposure times increased, the high edge of the histogram wandered up until it hit some sort of barrier near 10000 counts. This must be the actual saturation level of the camera. It is advertised as 12-bit, but that would imply a maximum count near 4096. So it seems we have at least one bit more than 12: 13 bits reach 8191, and a ceiling near 10000 would need 14 unless the conversion rescales the values.
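As a quick check of the bit-depth arithmetic, here is a minimal sketch in Python. It assumes the TIFF values are unscaled raw counts, which I have not verified for x3f_extract. The ceiling of 10000 is the approximate value read off the histograms, and the helper names are made up for illustration.

```python
def full_scale(bits: int) -> int:
    """Largest unsigned integer that fits in the given number of bits."""
    return 2 ** bits - 1


def bits_needed(max_count: int) -> int:
    """Smallest number of bits able to hold max_count as an unsigned integer."""
    return max(1, max_count.bit_length())


# Approximate saturation level read from the histograms (assumed to be
# unscaled raw counts; the conversion may in fact rescale them).
observed_ceiling = 10000

for bits in (12, 13, 14):
    fits = observed_ceiling <= full_scale(bits)
    print(f"{bits} bits: full scale {full_scale(bits)}, ceiling fits: {fits}")

print("bits needed for the observed ceiling:", bits_needed(observed_ceiling))
```

If the conversion applies a gain or offset, the count at which the histogram stops no longer maps directly onto a bit depth, so this is only a consistency check.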

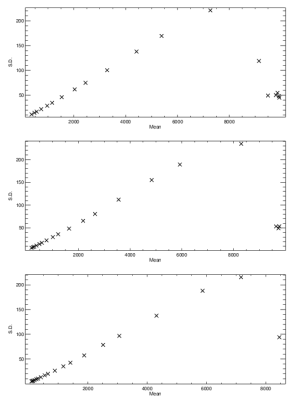

This is a plot of mean value vs standard deviation of a selected square on the test card. There is a linear relationship between the S.D. and the mean value (I thought it would be between the mean value and the variance), but it breaks down at high mean values.
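To illustrate why I expected the variance, not the S.D., to follow the mean: for pure photon (shot) noise the variance is proportional to the mean, so the S.D. grows only as the square root of the mean. The sketch below uses synthetic pixel values, not my measurements, drawn from a Gaussian approximation to shot noise with a gain of 1 count per electron (an assumption). The function names are hypothetical.

```python
import random
import statistics


def patch_stats(pixels):
    """Return (mean, standard deviation, variance) of a flat list of pixel values."""
    mean = statistics.fmean(pixels)
    var = statistics.pvariance(pixels, mu=mean)
    return mean, var ** 0.5, var


def synthetic_patch(signal, n_pixels=20000, rng=None):
    """Synthetic flat patch: Gaussian approximation to shot noise, sigma = sqrt(signal)."""
    if rng is None:
        rng = random.Random(0)
    sigma = signal ** 0.5
    return [rng.gauss(signal, sigma) for _ in range(n_pixels)]


rng = random.Random(42)
for signal in (100, 400, 1600, 6400):
    mean, sd, var = patch_stats(synthetic_patch(signal, rng=rng))
    # For shot-noise-limited data var/mean stays roughly constant,
    # while sd/mean falls as the signal increases.
    print(f"signal={signal:5d} mean={mean:8.1f} sd={sd:7.2f} "
          f"var/mean={var / mean:5.2f} sd/mean={sd / mean:6.4f}")
```

On real data, `patch_stats` would be applied to the pixels of the selected square in each exposure. An S.D. that rises linearly with the mean would point to a noise source that scales with the signal itself, not to shot noise alone.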

Added later: Ignore the plot. Look here instead: link